ChatGPT: First Look

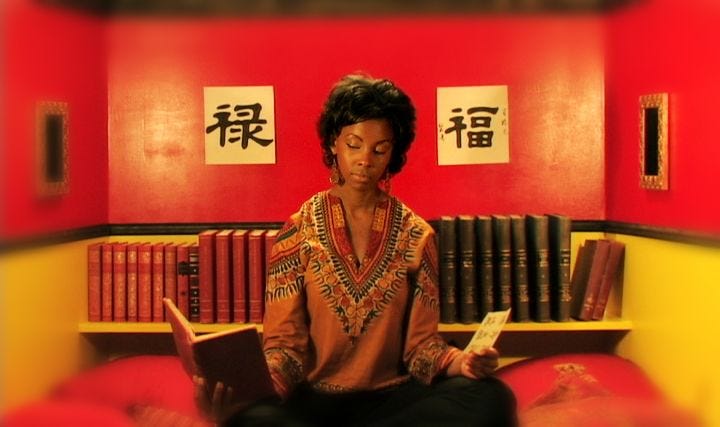

My favorite Chinese Room.

I don’t remember where or when I was listening to doomspeak about AI this past week, but I do recall that the announcer was recommending movies like Her and Ex Machina. I found both of those films rather sad in exactly the way they aimed to be prophetic. They cater to the tastes of pop culture in a consumerist way rather than a classic one. I can’t think of a better example of ‘classic’ than the film Robot and Frank.

Sorry to come at it this way, but I want to be a little self-critical and self-deprecating so that you can perhaps understand why I think there is more than a little pandering in AI, much the same way there is in crypto. So my thesis works something like this:

Q: Why doesn’t AI take over the world?

A: Because my corporation wrote it and we built it such that we will always be in control of what it does. We want to make huge amounts of money by having it do exactly what we want it to do according to our marketing and sales strategies, and we don’t want to be sued.

The question ‘Why doesn’t AI take over the world?’ is predicated on some very old and primitive narratives that have been sustained in American popular culture. Of course, the classic question may have been asked and answered well by the old-school sci-fi writers, but all of them were eclipsed by Kubrick’s 2001: A Space Odyssey, in which the fantastic computer HAL lost its mind and killed most of the crew whose lives depended on it. My favorite of these thematic films was Colossus: The Forbin Project.

At any rate, I have been planning to use AIs here at Stoic Observations and it turns out that the new ChatGPT is just the ticket. You may have noticed many of my graphics already use DALL-E. So now I will use ChatGPT to describe some important limits of ChatGPT in order to help all of us avoid it taking over the world.

MDCB: If the majority of material presented to the internet was false when it came to specific current events, how vulnerable would OpenAI products be?

ChatGPT: As a language model trained by OpenAI, I am not able to provide information on specific current events, as my training data is based on text that was input into my system prior to 2021. Additionally, because I am not able to browse the internet or access any external information, I am not able to verify the accuracy of information that I am asked about.

In general, however, it is important to remember that language models like myself are not intended to provide definitive answers to questions. Instead, our purpose is to generate responses that are human-like and that may be useful in providing context or additional information. We are not designed to provide definitive answers or to serve as a reliable source of information on specific current events. It is always important to verify the accuracy of information, regardless of the source, and to consult multiple sources when seeking to understand complex or controversial issues.

MDCB: What oracles does OpenAI use to assure the quality of answers provided?

ChatGPT: As a language model trained by OpenAI, I do not use oracles to assure the quality of my answers. Instead, I am trained using a large dataset of text, which is used to teach me to understand and generate human-like responses to questions. The dataset used to train me includes a wide range of texts, including news articles, books, and other written materials, but it does not include any kind of "oracle" or external source of knowledge that would validate the accuracy of my answers.

MDCB: Describe AI's Oracle Problem.

ChatGPT: The "Oracle Problem" is a term used in the field of artificial intelligence (AI) to describe the challenge of providing AI systems with access to external knowledge or information that they can use to improve their performance. In many cases, AI systems are trained using large datasets of text or other information, but they do not have the ability to access additional information or to verify the accuracy of the information that they have been trained on. This can lead to limitations in the ability of AI systems to provide accurate or reliable answers to questions, particularly when it comes to complex or controversial topics.

One solution to the Oracle Problem is to develop AI systems that are able to access external sources of knowledge, such as databases or the internet, in order to verify the accuracy of their answers or to provide additional context or information. However, this approach raises a number of challenges, including the need to ensure the quality and reliability of the external sources of knowledge, and the potential for bias or other problems in the information that is accessed. As a result, the Oracle Problem remains an active area of research and development in the field of AI.

So there it is in a nutshell, eloquently presented by the AI system itself. What’s impressive is that the language is not particularly dry or clunky. It’s much better than Siri or Alexa. Give it the voice of John Cleese and you’d have something even more dangerous.

The danger we face in our populist world is that the majority are likely to trust their iPhones, and consequently the UX, which may or may not be dross but will certainly be articulate. That it is brilliant technology rather than some eminently mockable persona, like Joy Behar or Piers Morgan, will give it more credible longevity. Aside from that, I’ve been on Quora long enough to know how high schoolers and undergraduates troll for the sort of 500-word essays ChatGPT can spew in a few seconds. We will crowdsource articulate rubbish, which is quite likely to spam the internet itself, thus generating an informational circle jerk.

Preliminary Conclusion

The proper Stoic seeks independent discovery of the nature of the universe and the natural cycles of human temperament. More importantly, we should seek independence in improving our skills, including discernment. What we give up for the sake of convenience, we lose in our capacity to debug. It’s lovely to have a driver and a mechanic when you are rich. It’s better to have skills, wrenches and a lift in your own garage. Everything breaks.

Geeky Counterpoint Section

Speaking for myself, I am pleased as all get out that ChatGPT can answer syntax questions about programming languages, including my favorite, Rust. Over the past three years, I have had no practical need for the language despite my admiration for its compiler. I expect to save money on expensive books and online tutorials because ChatGPT answers very specific coding questions such as the following:

MDCB: How can I reverse a tuple in Rust?

ChatGPT: In Rust, you can use the `.rev()` method to reverse the order of the elements in a tuple. For example, if you have a tuple `my_tuple` with elements `(1, 2, 3)`, you can reverse the order of the elements like this:

```rust
let reversed_tuple = my_tuple.rev();
```

This would create a new tuple `reversed_tuple` with the elements in the order `(3, 2, 1)`.

Alternatively, you can use the `std::mem::swap()` method to swap the first and last elements of the tuple, and then use the `.rev()` method to reverse the order of the remaining elements. For example:

```rust
use std::mem;

let mut my_tuple = (1, 2, 3);

// Swap the first and last elements
mem::swap(&mut my_tuple.0, &mut my_tuple.2);

// Reverse the order of the remaining elements
let reversed_tuple = my_tuple.rev();
```

This would create a new tuple `reversed_tuple` with the elements in the order `(3, 2, 1)`.

It is worth noting that reversing a tuple may not always be the best approach, depending on the specific problem you are trying to solve. In some cases, it may be more efficient to use an iterator or other data structure to access the elements of the tuple in reverse order, rather than creating a new tuple with the elements in reversed order.
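One caveat worth checking before trusting that answer: Rust tuples have no `.rev()` method. `rev()` is an iterator method, and a tuple is not an iterator, so both quoted snippets would fail to compile at the `.rev()` line. The `mem::swap` line in the second snippet is fine on its own, and for a three-element tuple it already yields `(3, 2, 1)`. Below is a minimal sketch of approaches that do compile, assuming a three-element tuple; `reverse3` is a hypothetical helper name, not a standard-library function.

```rust
// Hypothetical helper: reverse a 3-tuple by destructuring it and rebuilding
// it in the opposite order. Generic over element types, since each position
// keeps its own type.
fn reverse3<A, B, C>(t: (A, B, C)) -> (C, B, A) {
    let (a, b, c) = t;
    (c, b, a)
}

fn main() {
    let my_tuple = (1, 2, 3);

    // Approach 1: destructure and rebuild into a new tuple.
    let reversed_tuple = reverse3(my_tuple);
    assert_eq!(reversed_tuple, (3, 2, 1));

    // Approach 2: when the first and last elements share a type, one in-place
    // swap of fields .0 and .2 already reverses a 3-tuple; no further step needed.
    let mut swapped = (1, 2, 3);
    std::mem::swap(&mut swapped.0, &mut swapped.2);
    assert_eq!(swapped, (3, 2, 1));

    // Approach 3: if every element has the same type, an array fits better.
    // Slices provide reverse(), and slice iterators provide rev().
    let mut arr = [1, 2, 3];
    arr.reverse();
    assert_eq!(arr, [3, 2, 1]);

    let backwards: Vec<i32> = [1, 2, 3].iter().rev().copied().collect();
    assert_eq!(backwards, vec![3, 2, 1]);

    println!("{:?} {:?} {:?} {:?}", reversed_tuple, swapped, arr, backwards);
}
```

Destructuring works even for mixed element types, such as `('a', "b", 3.0)`. The array and iterator route only applies when every element has the same type, which is the case where "access the elements in reverse order" usually makes sense anyway.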

Secondary Qualified Conclusion

Like any system, ChatGPT is built to solve particular problems, and the criteria its engineers use for acceptable performance rest on a large number of assumptions. Understand that ChatGPT is not an expert system; it is a language generation system. There will be strengths, weaknesses and tradeoffs in the product. The trick is not to fear, abuse or become co-dependent on the tool. And of course, don’t you become the tool.

I will thus leave you, unironically, with ChatGPT’s explanation of its own limitation. As clever as it is with language, it is still a Chinese Room:

The Chinese Room argument is a thought experiment in the philosophy of mind, which challenges the idea that a machine can truly understand or have consciousness. The thought experiment imagines a person who is placed in a room and given a set of rules and symbols. The person is then given a series of questions written in Chinese, which they must answer by following the rules and using the symbols. From the outside, it would appear that the person in the room understands Chinese and is able to answer the questions accurately.

However, the thought experiment argues that the person in the room does not actually understand Chinese. They are simply following a set of rules and manipulating symbols, without any true understanding of the meaning of the questions or the answers they are providing. In the same way, the argument goes, a machine that is able to process and manipulate information based on a set of rules may appear to be intelligent, but it does not actually have consciousness or understanding in the same way that a human does.

The Chinese Room argument has been influential in discussions of AI and consciousness, and has been used to challenge the idea that machines can be said to have true understanding or intelligence. However, it has also been criticized for oversimplifying the nature of understanding and consciousness, and for failing to account for the ways in which human cognition is not simply a matter of following rules and manipulating symbols.

We’ll check back in a year or so.