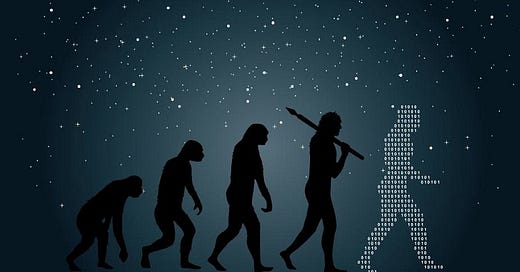

The Evolution of Data Warehousing in the AWS Cloud

Our company has been designing cloud architectures for BI and data warehousing in the Amazon cloud for a decade. Over the past several years, we have implemented a number of new features as we evolve our own expertise in satisfying our customers. Allow me to share them with you.

Cloud Implementation of Big / Fast / Wide

The first thi…
